Haptic technology could be the key to delivering the physicality expected in experiences such as virtual reality, gaming, education, and affective communication.

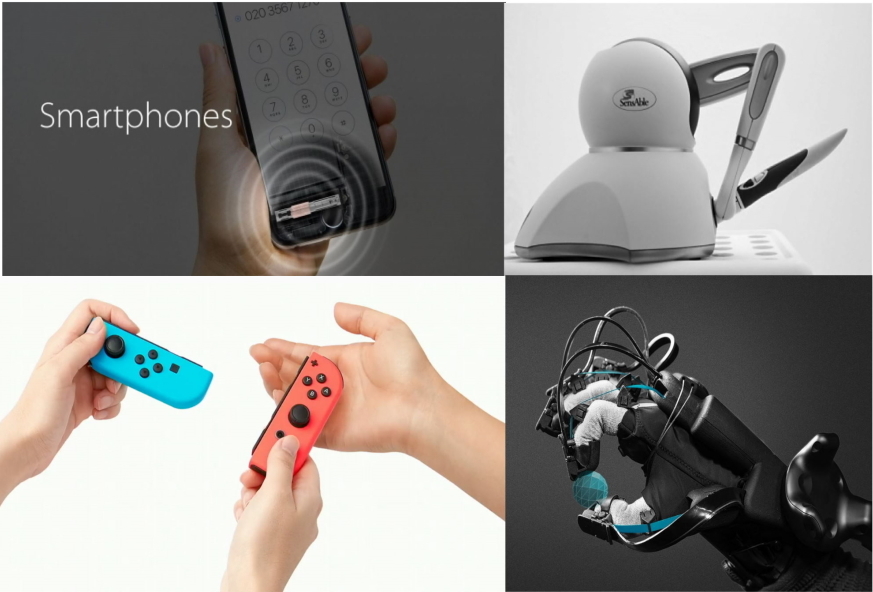

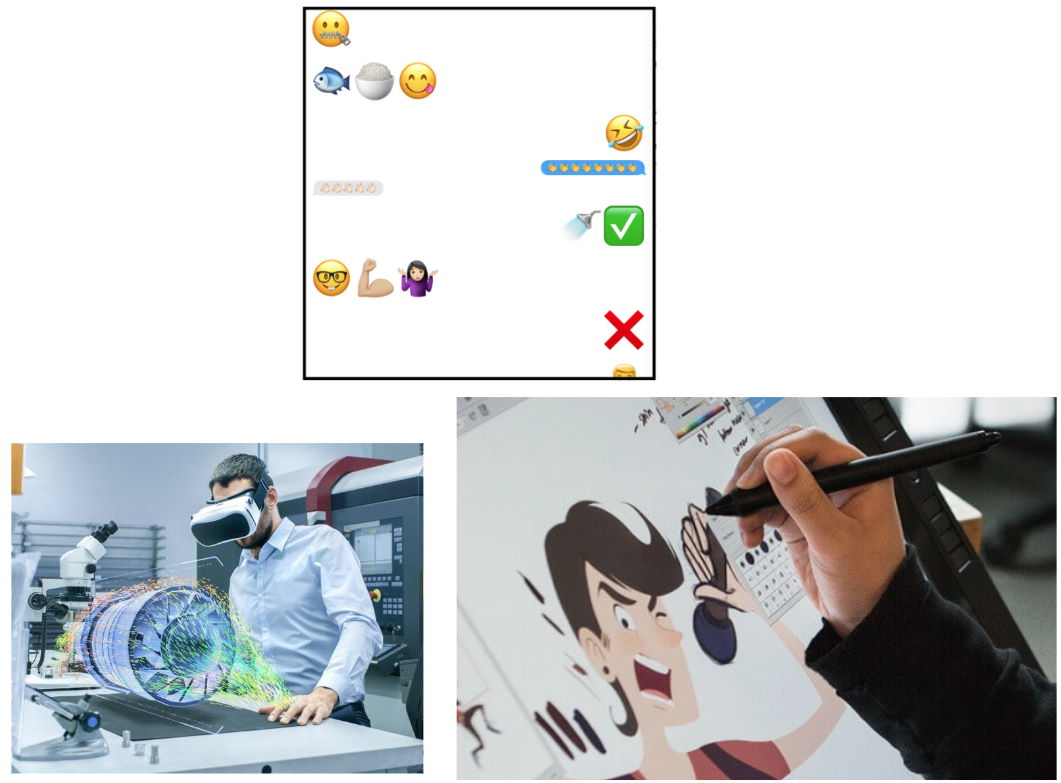

However, with so many types of haptic technology available, such as smartphones, smartwatches, and game controllers, what is the best way to design with haptic technology to support a physical experience?

Credit: Forbes (Left), Hacker Noon (Middle), Top Chartex (Right).

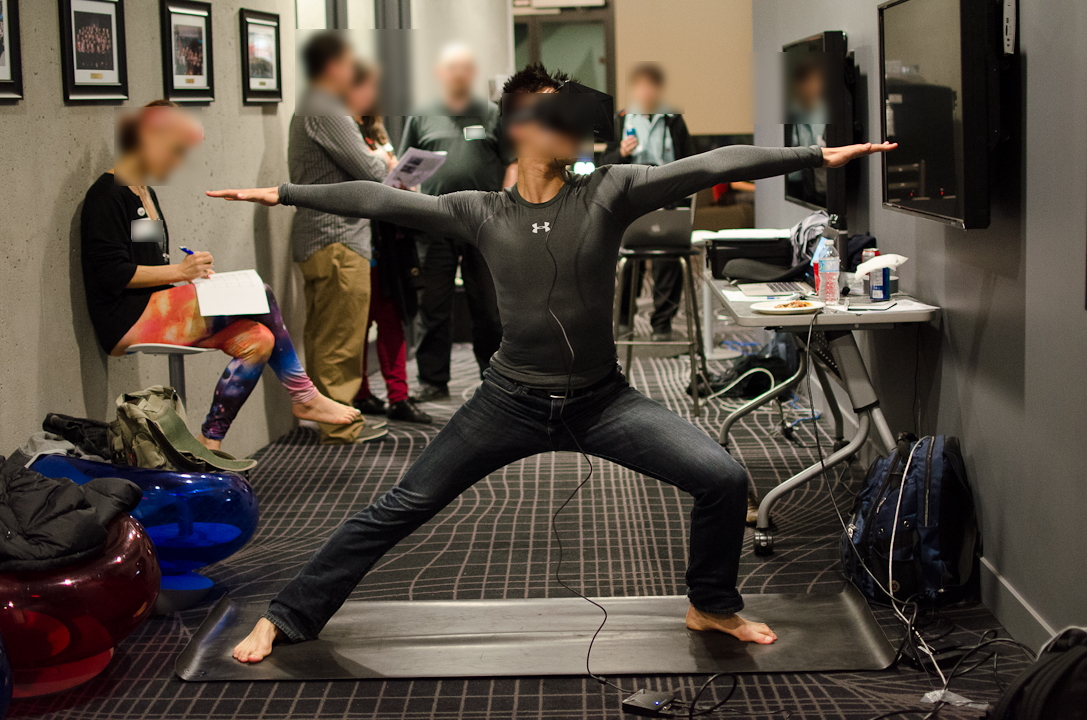

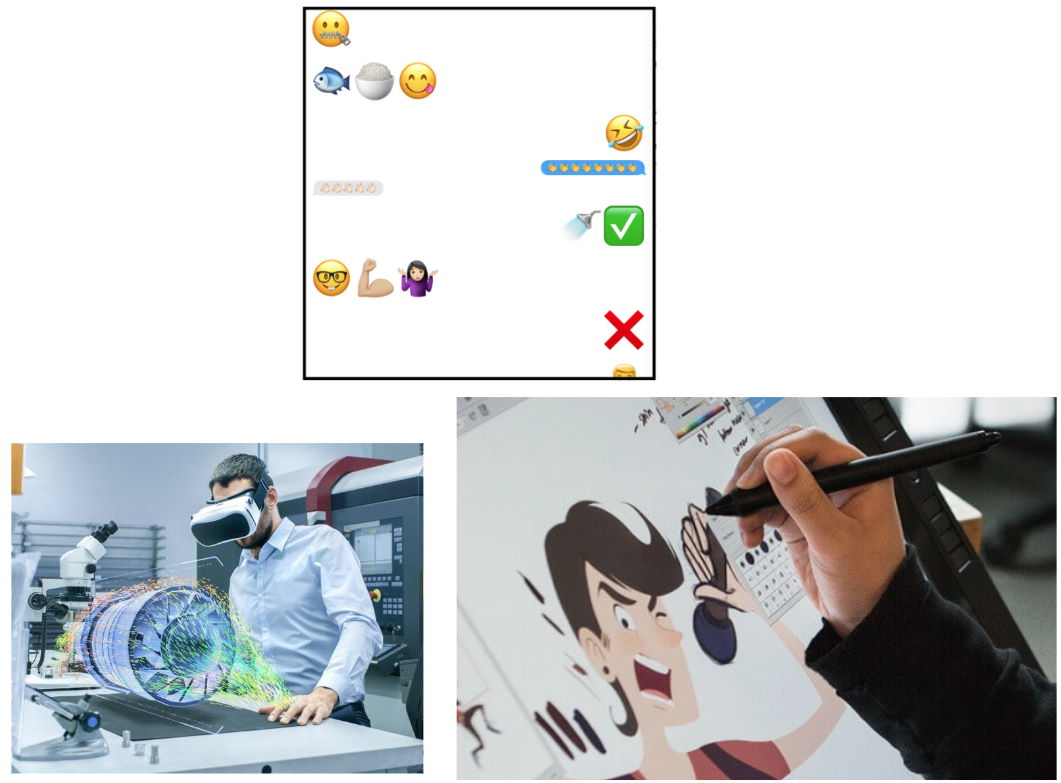

Being able to feel physical objects, metaphors of emotions, and constraints could be helpful for VR, affective communication, and physical gesture training.

Credit: IT Pro Today (Top Left), Stephen Brewster (Top Right), My Nintendo News (Bottom Left), Tech Spot (Bottom Right).

The many types of haptic technologies out there.

This is the challenge I sought to address as a student in the Sensory Perception and Interaction (SPIN) group at the University of British Columbia, by researching the conceptual and technical design processes behind haptic interactions.

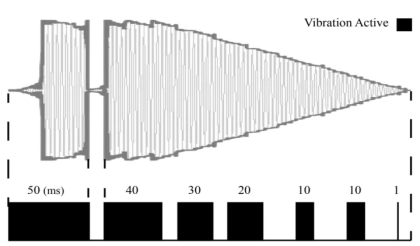

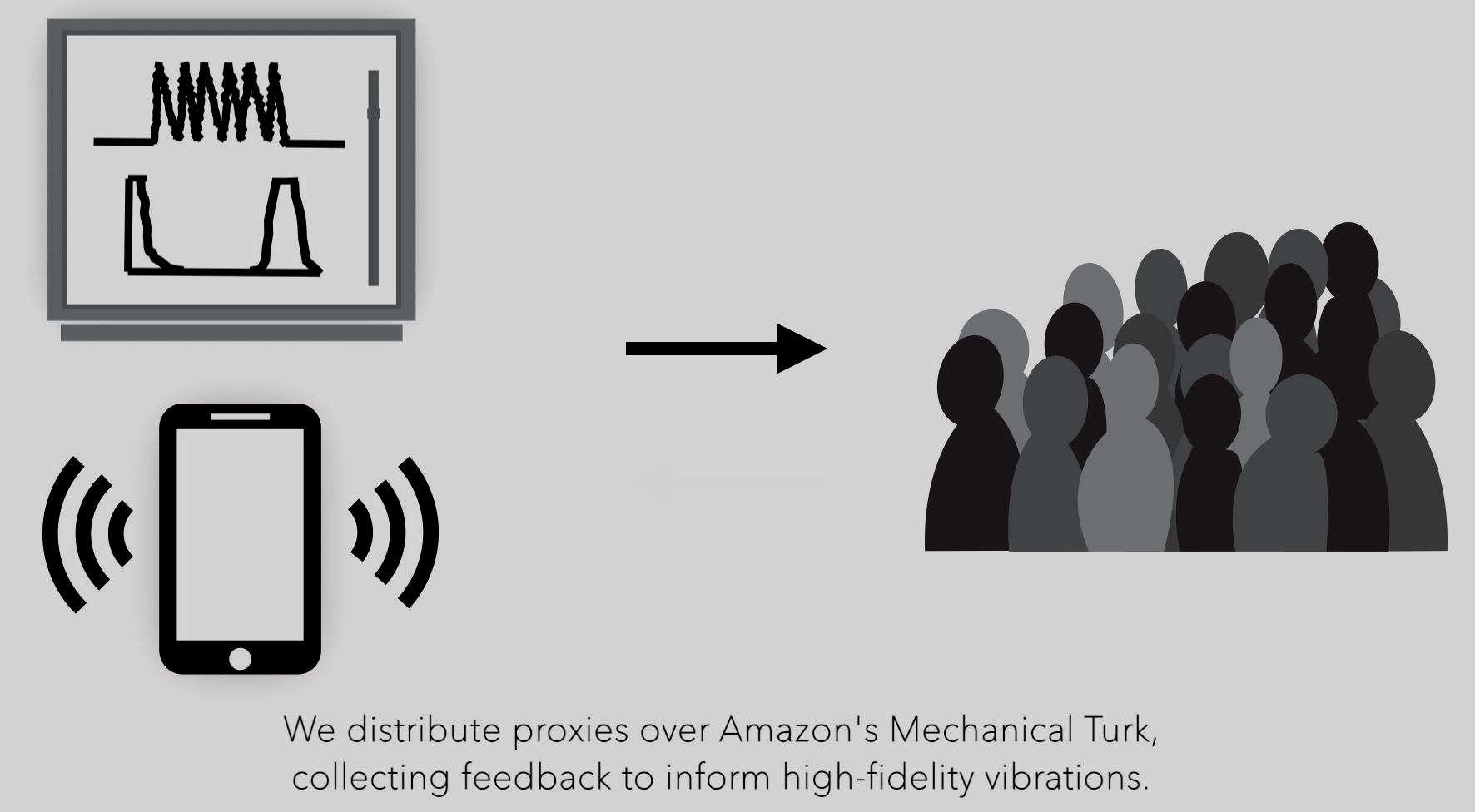

In one project, I worked with a PhD student on a haptic effect prototyping tool called Macaron, which allowed haptic designers to create, refine, and mix elements of different vibrotactile effects to quickly build new effects for smartphones and smartwatches. My role was to develop quality-of-life features, such as saving and loading effects, using the React framework. The tool is linked in the left panel.

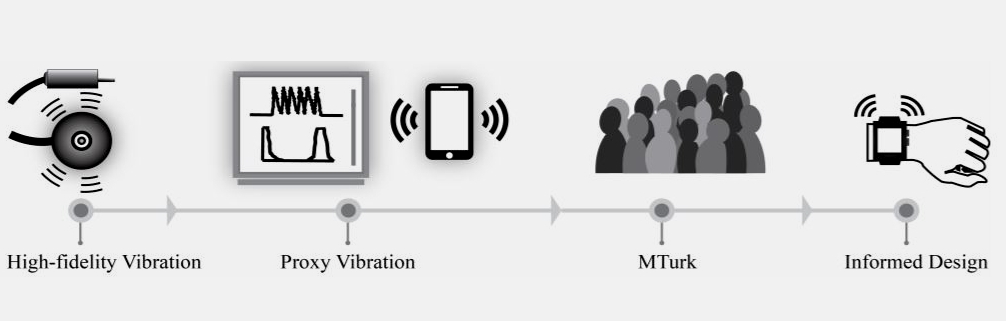

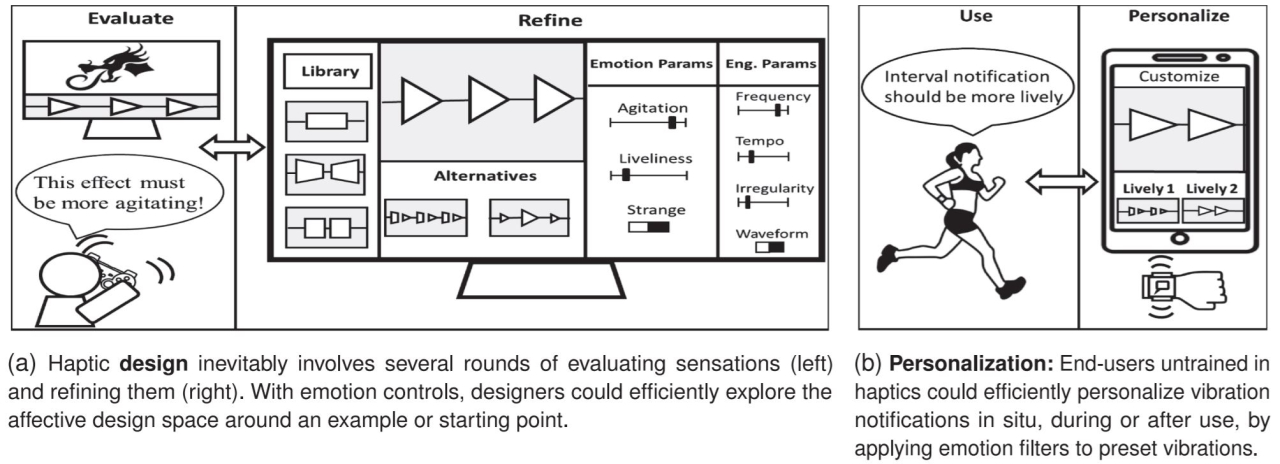

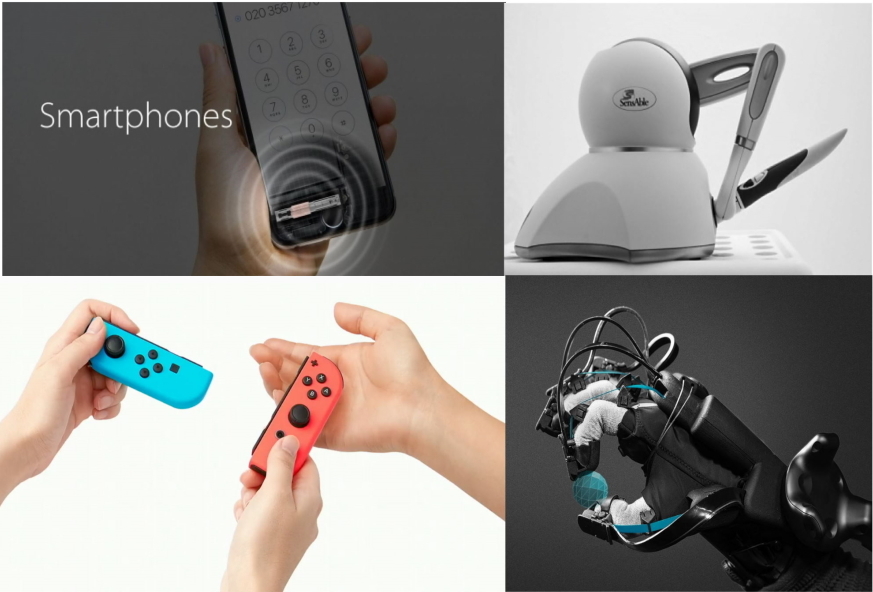

In another project, I worked with a different PhD student to research and conceptually design an interface for tuning the affective and pragmatic qualities of vibrations, so that the vibrations would meet the demands of various situations in ways that smartphone and smartwatch users would appreciate (e.g., useful notifications and affective communication). The associated publication is linked in the left panel.

How haptic designers and end users could conceptually benefit from tuning haptic effects parametrically.

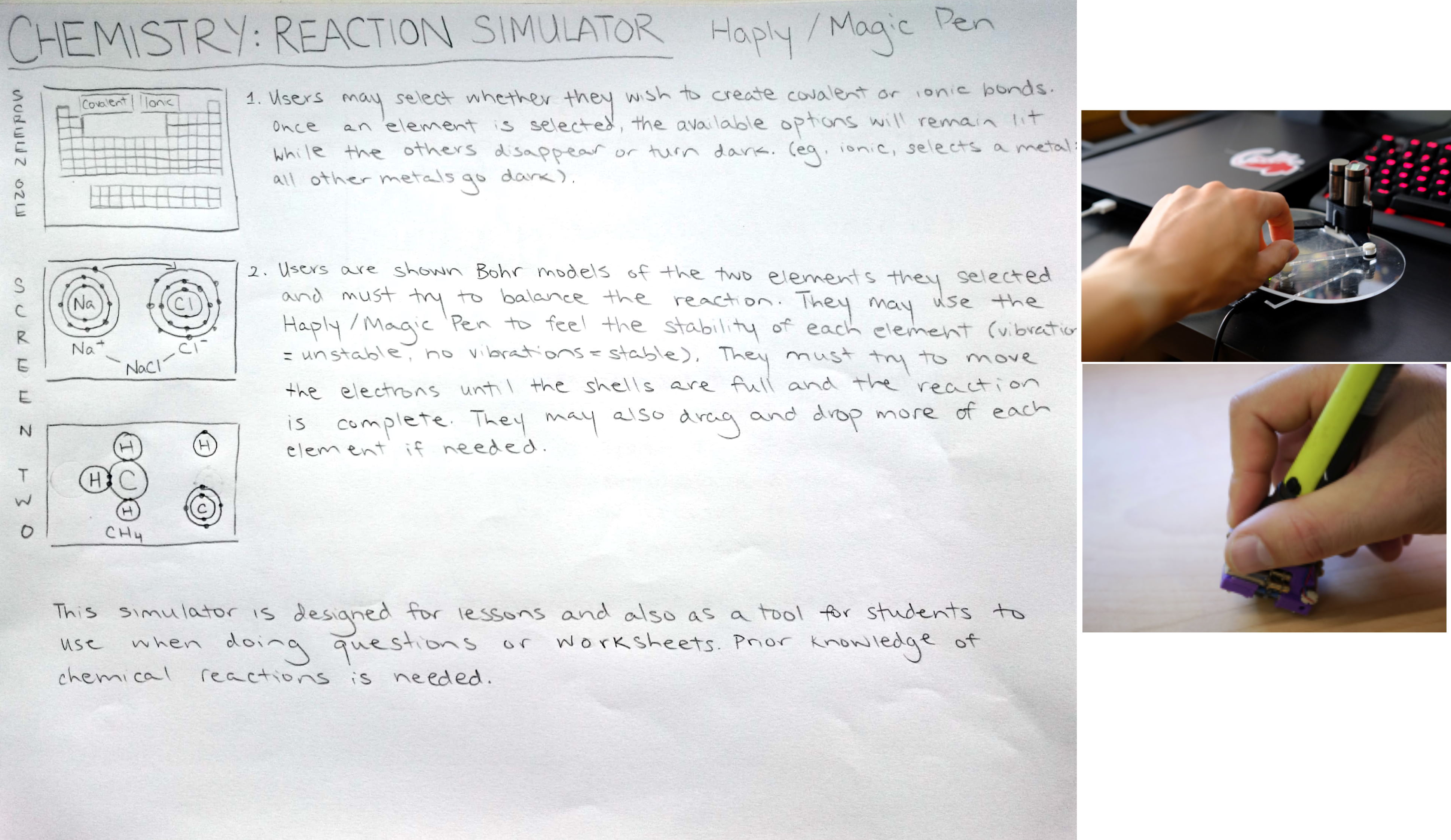

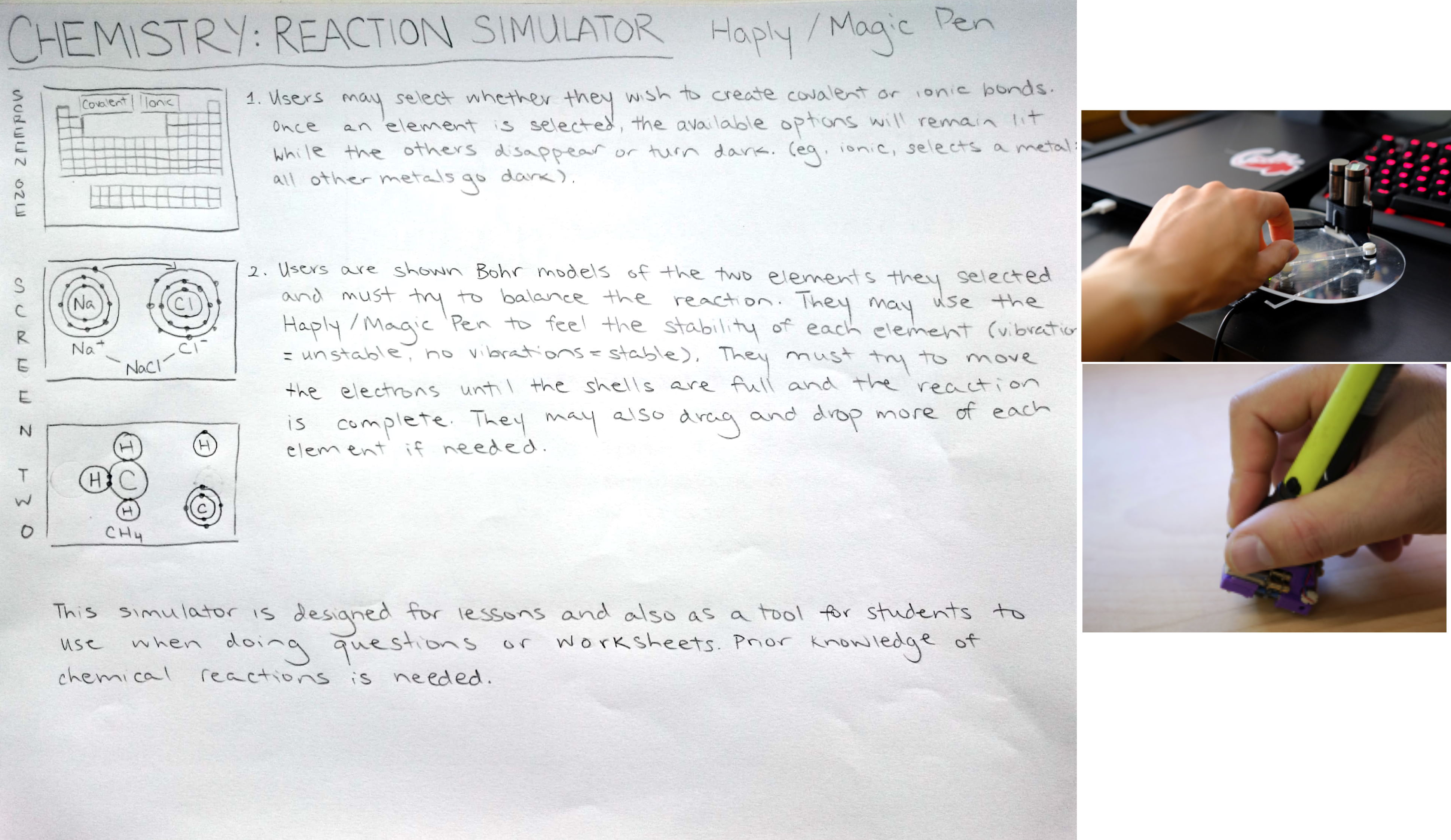

I also worked with secondary school students to identify possible ways for haptic technology to support educational experiences. We analyzed existing STEM and classroom approaches in order to ideate haptic designs that would teach STEM concepts in a physical manner. The students practiced core HCI research methodologies such as user interviews, cognitive walkthroughs, focus group interviews, and qualitative analysis.

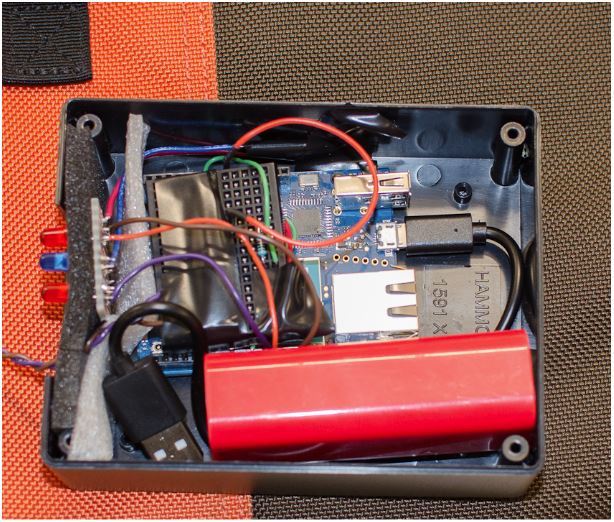

Credit: UBC SPIN Lab (Magic Pen, Bottom), Haply Robotics (Haply, Top). An example conceptual sketch of how two haptic devices could be used for educational purposes. Design created by secondary school students.

My Master’s thesis systematically reviewed the literature of different academic communities and compared their design practices in creating haptic applications. Specifically, the interactive design and engineering/psychological communities were characterized in terms of the technical and conceptual aspects of designing haptic applications. The results suggested ways in which each community's strengths in haptic design could be reinforced, and its gaps addressed, by the practices of another community.